Mobile and Ubicomp

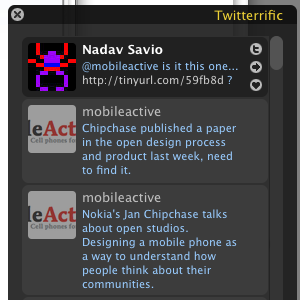

Just had an interesting interaction. A colleague, while watching a talk, posted to Twitter from her phone (I assume) that she wanted to find a document by the speaker. I was at my laptop, so I found it and replied with the URL. The interesting thing here from a mobile design perspective is that the network optimized the task of finding the resource: it would have been difficult for her to do on her phone, but it was trivial for me. I don’t know what to call this: micro-lazyweb or “responsibility shifting” or something. Oh, the link: Jan Chipchase - Future Perfect: (Nokia) Open Studios

I’ve been meaning to write up my experiences with a couple of mobile phones for a while. I used a Nokia 6682 for a year or so and recently switched over to a Sony k790a.

My experience with the k790 is a great lesson in why product managers and designers continue to fight about whether features or experience should drive product development. I bought the phone because I wanted a phone with a “real” camera, and at the time it was (arguably) the best cameraphone out there (3.2 megapixels, auto-focus, xenon flash, etc.). I did lots of research and bought it primarily on the basis of features. After using the phone and camera for a few weeks, however, I realized that while the k790 has an amazing camera for a phone, especially for stills, it will be a while before any cameraphone holds a candle to a decent dedicated point-and-shoot camera (e.g. Canon Elph), let alone a digital SLR. So much for features.

So, you’d think I would have been disappointed, but in fact I had already grown to love the phone based solely on its software interaction design. It’s among the most impressive pieces of small-device UI design I’ve encountered (and that includes the iPod, which is obviously amazing but has a much, much easier job description). It’s far from perfect, of course, and there are some patently silly things, as with all phones (for example, when looking up contacts, you can only search by first name rather than first or last, so typing “JO” gives me “John Zapolski” but not “Oliver Johns”). But there are so many places where the designers got it right. What I mean by getting it right is that they understood the context the phone would be used in, figured out the most likely thing or things you’d want to do, and then designed the interface to make those things either extremely easy or automatic. I regularly find myself pleasantly surprised by the interface, which is rare indeed, as we all know from countless bad experiences. And, as an interaction designer myself, I can tell you that this is incredibly difficult to pull off.
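
How the k790 actually implements contact lookup isn’t something I know, so here is only a hypothetical sketch of the fix, assuming contacts are stored as plain “First Last” strings: match the typed prefix against the start of every word in the name instead of just the first one. The function names and sample list are made up for illustration.

```python
def search_first_name_only(contacts: list, query: str) -> list:
    """Hypothetical version of the annoying behavior: prefix-match the whole string only."""
    q = query.casefold()
    return [name for name in contacts if name.casefold().startswith(q)]


def search_contacts(contacts: list, query: str) -> list:
    """Hypothetical fix: return contacts whose first *or* last name starts with the query."""
    q = query.casefold()  # case-insensitive, so "JO" matches "John" and "Johns"
    if not q:
        return list(contacts)
    return [
        name
        for name in contacts
        if any(part.casefold().startswith(q) for part in name.split())
    ]


contacts = ["John Zapolski", "Oliver Johns", "Maria Lopez"]
print(search_first_name_only(contacts, "JO"))  # ['John Zapolski']
print(search_contacts(contacts, "JO"))         # ['John Zapolski', 'Oliver Johns']
```

Checking every word rather than only the surname also copes with middle names, at the cost of a few extra matches on short queries.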

It’s the little things, like how, when scrolling vertically through your list of contacts, you can then scroll horizontally through each person’s contact methods (home phone, work phone, email) and choose the one you want. It (mostly) just feels incredibly fluid.

By contrast, the Nokia suffers from having to support a lumbering, generalized operating system: I have the option of downloading lunar calendars, golf handicapping systems, and all kinds of other pseudo-useful Symbian applications, but at the expense of doing the things that matter with any degree of subtlety.

(Note: This was all written pre-iPhone, which generally out-fluids the Sony.)

A while back I was thinking about the overlapping layers of context within which mobile interactions take place (more on the word “mobile” in another post). I quickly sketched out a visual model to help me (and other designers working on situated experiences) keep these various layers in mind. Here’s the resulting paper (200k pdf), which my collaborator, Jared, will be presenting later this year at Mobile HCI in Singapore.

And, here’s the abstract:

Designers of mobile applications have long understood that mobile devices are operated within a context of significant constraints and environmental distractions. Despite this knowledge, however, many mobile applications are designed as if they were merely shrunken desktop or Web applications.

To encourage consideration of the specifics of context for mobile interactions and to highlight new user-meaningful opportunities latent in always-on, always-carried devices, this article describes a context model for mobile interaction and a set of design heuristics for successful mobile interactions.

Feedback welcomed!

Jared and Yue have written a nice overview of our ongoing Mobile China research for UIGarden. From their conclusion:

In contrast to the expectations of many of our technology clients, we have found in our research that youth discourse about telephones focuses much more heavily on emotions rather than technology and features. The implications for designers of mobile devices and services is to focus more on the human side of this emerging technology, new youth identities, and popular desire for entertainment, fashion and companionship.

It occurred to me the other day that Scott McCloud’s point about the web’s infinite canvas applies (and is vastly more important) to annotating physical space. Any given physical location needs both multiple categories of information (e.g. basic information, reviews, history, etc.) and also infinite instances of each. And it’s going to get real messy real fast, given both the variety of data (where’s the nearest bathroom vs. Mark Twain slept here vs. I love this place vs. danger: asbestos!) and the imprecision inherent in this kind of layering (did Mark Twain sleep here or over here or maybe right there?). I don’t see any way to do that other than to open it up and let the world have at it.

I’ve been bothered by the raft of mobile informational services that don’t take into account the real context of use. When would I ever want to find something as generic as “pizza” in a given location? OK, maybe ice cream, but still, while walking around a city I want either something really specific (e.g. where’s the nearest Blue Bottle coffee) or something more general but of editorially (or socially) vetted quality (e.g. show me a cool place to read for an hour near here). So I have to give a shout-out to MizPee, which is an old idea (and probably not implemented right), but at least it’s immediately useful enough to get me to bother with the mobile web.

We’ve been up in Mendocino this past week at a cabin overlooking cliffs and white surf. Beautiful and rustic. And part of the beautiful, rustic cabin is a charming wood-burning stove in which a roaring fire was built. The trouble, of course, with charming wood-burning stoves in which one has built a roaring fire is that they get very hot and, especially when you (or, say, your darling son) are 2 years old or so, it is easy to forget this fact and, fascinated by the pretty, pretty flames, put your tiny hand on the corner of the stove. What ensues - screaming, parents running to the fridge for ice, more screaming, hugs, sobbing, etc. - is generally presented to the victim as a handy lesson (“now you know” etc. etc.), but the trouble is that next time he’s really just about as likely to put his hand back on the stove because (and this is the point) the stove doesn’t look hot. Hot is red (like fire). Hot is glowing (like embers) or waving (like air over a highway). But the stove is just dull and black. Oh yeah, and by the way, it’s 400-freaking degrees!

So, what’s my point? Well, this is a case where it makes sense to me to add in some information technology. To augment reality. To ubiquitously compute. How straightforward it would be if the stove (or, say, that scalding pot handle you were about to grab because, well, handles afford grabbing) glowed bright red.

Which leads to my First Law of Information Technology for Objects: it makes sense to augment objects with information technology when doing so enables the objects to communicate useful information about themselves which would otherwise be difficult or harmful to detect. (There are other reasons, of course, such as enabling objects to make use of information about other objects, but I only felt like making up one law.)

Of course, issues arise when you start augmenting things. You have to think about what you might be covering up with your informational “overlay.” For example, there was another rustic cabin I spent a night in, this one up in Humboldt, where we built a roaring fire in a wood-burning stove. No one touched this stove, despite the presence of certain vegetable inebriants, partly because we let the fire get so hot that the stove started glowing red (all by itself). If some technological augmentation had turned it red at, say, 250 degrees, maybe we wouldn’t have noticed it creep up to 750 (or wherever iron starts to melt), and it would have burnt the cabin down. This is probably a solvable design problem (multiple shades from yellow to white as it gets hotter, for example), but the point remains: careful what you subtract when you start adding.
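
To make the “multiple shades” idea a bit more concrete, here is a hypothetical sketch of a graded warning: instead of one threshold that turns the stove red and then stops telling you anything, the color keeps escalating as the temperature climbs. The 250 and 750 figures echo the numbers above; the 500 midpoint and the function name are my own assumptions, not a real product spec.

```python
from typing import Optional

# Hypothetical warning bands in degrees Fahrenheit, hottest first.
# 250 and 750 echo the post; 500 is an assumed midpoint.
WARNING_BANDS = [
    (750, "white"),
    (500, "yellow"),
    (250, "red"),
]


def warning_color(temp_f: float) -> Optional[str]:
    """Return the glow color for a surface temperature, or None below the first band."""
    for threshold, color in WARNING_BANDS:
        if temp_f >= threshold:  # hottest band checked first, so the first match wins
            return color
    return None


for reading in (70, 300, 600, 800):
    print(reading, warning_color(reading))
```

Running the loop prints None for 70, red for 300, yellow for 600, and white for 800: the overlay still says “hot” at 250, but it doesn’t hide the difference between hot and dangerously hot.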

Postscript: There’s a companion Law of Minimal Ugliness in Ubicomp, something to the effect that we need to consider the aesthetics of all information manifestation to avoid augmenting ourselves into ugliness (otherwise known as the Phoenix Principle).

So, the “first feature-length film shot on a mobile phone” looks about how you’d imagine a feature film shot on a mobile to look. But it doesn’t do so gratuitously (well, ok, no way am I going to watch the whole thing, but you quickly get the aesthetic point: voyeuristic, degraded, subjective, paranoid). I always like these moments of low-res injected into the endless march to higher definition, when people first use the limitations of a transitional medium for art. (thanks, Tina)

WineM is a nicely put-together “technology sketch” (basically a video of an idea for a product) from Mike Kuniavsky’s new company, ThingM. WineM is a wine rack that uses RFID to associate bottles with their UPCs, layers information onto the collection of bottles it contains, and then lets you “browse” your collection (with admirable immediacy) along the standard wine facets (varietal, vintage, region, etc.). I link to it not because Mike’s a friend, but because people are always asking me “what’s all this about ubicomp… is that like surfing the web from your fridge?” and WineM is a perfect example. It’s cool, but it’s also way useful: technology enhancing the experience rather than technology becoming the experience. Well done, Mike and Tod.
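
The video doesn’t say how WineM works internally, so this is only a hypothetical sketch of the “browse by facets” part, assuming each RFID tag has already been resolved to a record with a UPC, varietal, vintage, and region. The `Bottle` type, the helper names, and the sample bottles (including the UPCs) are all made up for illustration.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Bottle:
    upc: str       # hypothetical: the product code an RFID tag resolves to
    varietal: str
    vintage: int
    region: str


def filter_bottles(bottles, **selected):
    """Keep only bottles that match every selected facet value, e.g. varietal="Zinfandel"."""
    return [
        b for b in bottles
        if all(getattr(b, facet) == value for facet, value in selected.items())
    ]


def facet_counts(bottles, facet):
    """Count how many bottles fall under each value of a single facet."""
    return Counter(getattr(b, facet) for b in bottles)


# Made-up sample rack contents.
rack = [
    Bottle("000000000001", "Pinot Noir", 2003, "Sonoma"),
    Bottle("000000000002", "Zinfandel", 2001, "Mendocino"),
    Bottle("000000000003", "Pinot Noir", 2004, "Mendocino"),
]

pinots = filter_bottles(rack, varietal="Pinot Noir")
print(facet_counts(pinots, "region"))
```

Each selection narrows the set and the counts show what’s left along the other facets, which is roughly the drill-down feel the video conveys.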

I am fairly dismayed at the lengths mobile network operators will go to in order to cajole, trick, or force consumers into using higher-paying services, all in fealty to the cruel god ARPU. For example, Cingular modified the OS on its Nokia 6682 so that after you take a picture you no longer get a menu option to send it via email, as a way of getting people to send more (high-revenue) MMS messages. I suspect the result is that most people either curse under their breath as they go through the 10 steps it now takes to send via email or install a third-party app such as the excellent Shozu. Either way, Cingular gets nothing.

And there’s now some data to back up the assumption that the “walled garden” is a misguided strategy: MobileCrunch recently pointed to an Opera report showing that “in direct contradiction of operator’s fears, the use of the Opera browser - which circumvents the “walled garden” that many operators attempt to maintain as a means of further monetizing data customers - is clearly increasing the ARPU that operators receive based upon customer data usage.” Hah!